|

| Object Recognition and Detection with Deep Learning |

- Time Period: September 2006 - present.

- Participants: Koray Kavukcuoglu, Kevin Jarrett, Y-Lan Boureau, Rob Fergus, Yann LeCun (Courant Institute/CBLL).

- Sponsors: ONR, NSF.

- Description: This is a series of projects whose goal is to

produce category-level object recognition systems with state-of-the-art

performance that can run in real time. The systems are

hierarchical (multi-stage) and use "deep learning" methods

(unsupervised and supervised) to train the features at all levels.

A convolutional network pre-trained with sparse coding methods

and refined with supervised gradient descent achieves a correct

recognition rate of over 70% on the Caltech-101 dataset.

- MORE INFORMATION, VIDEO >>>>>.

| Sparse Coding for Feature Learning |

- Time Period: 2008 - present.

- Participants: Karol Gregor, Koray Kavukcuoglu, Arthur Szlam, Rob Fergus, Yann LeCun (Courant Institute/CBLL).

- Sponsors: ONR, NSF.

- Description: We are developing unsupervised (and semi-supervised) learning algorithms to learn

visual features. The methods are based on the idea that a good feature representation should be high dimensional

(so as to facilitate the separability of the categories), should contain enough information to reconstruct the

input near regions of high data density, and should not contain enough information to reconstruct inputs in

regions of low data density. This led us to use sparsity criteria on the feature vectors. Many of the sparse coding

methods we have developed include a feed-forward predictor (a so-called encoder) that can quickly produce an

approximation of the optimal sparse representation of the input. This allows us to use the learned feature

extractor in real-time object recognition systems. Variants of sparse coding are proposed, including one

that uses group sparsity to produce locally invariant features, two methods that separate the "what" from

the "where" using temporal constancy criteria, and two methods for convolutional sparse coding, where the

dictionary elements are convolution kernels applied to images.

- MORE INFORMATION >>>>>.
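To make the distinction between the optimal sparse code and its fast feed-forward approximation concrete, here is a minimal NumPy sketch. It is not the project's code. It assumes a plain L1 sparse coding objective minimized with ISTA (iterative shrinkage and thresholding), and the names `soft_threshold`, `ista`, `encoder`, the random dictionary `D`, and the encoder parameters `W`, `b`, `theta` are all hypothetical. The encoder is set by hand to a single shrinkage step purely for illustration; a trained predictor would learn its parameters.

```python
import numpy as np

def soft_threshold(v, t):
    """Shrink every entry of v toward zero by t (proximal operator of the L1 norm)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista(x, D, lam=0.1, n_iter=200):
    """Approximately minimize 0.5*||x - D z||^2 + lam*||z||_1 over the code z."""
    L = np.linalg.norm(D, 2) ** 2       # Lipschitz constant of the quadratic term's gradient
    z = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ z - x)        # gradient of the reconstruction term
        z = soft_threshold(z - grad / L, lam / L)
    return z

def encoder(x, W, b, theta):
    """One-pass feed-forward approximation of the sparse code (assumed form)."""
    return soft_threshold(W @ x + b, theta)

rng = np.random.default_rng(0)
D = rng.standard_normal((16, 64))       # overcomplete dictionary: 16-dim input, 64 atoms
D /= np.linalg.norm(D, axis=0)          # unit-norm dictionary columns
z_true = np.zeros(64)
z_true[[3, 17, 42]] = [1.5, -2.0, 1.0]  # a sparse ground-truth code
x = D @ z_true

z_opt = ista(x, D)                      # slow, iterative inference

# Illustration only: an encoder whose parameters equal one ISTA step from zero.
L = np.linalg.norm(D, 2) ** 2
z_fast = encoder(x, D.T / L, 0.0, 0.1 / L)
```

The iterative solver needs hundreds of matrix products per input, whereas the encoder needs one. That trade-off is what makes a feed-forward predictor attractive for real-time use.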

| Action Recognition in Video with Convolutional Networks |

- Time Period: 2009 - present.

- Participants: Graham Taylor, Chris Bregler, Rob Fergus, Yann LeCun (Courant Institute/CBLL).

- Sponsors: DARPA, ONR.

- Description: A trainable system was built to recognize actions in videos.

The first layer is a Convolutional Gated Restricted Boltzmann Machine, which is trained

in an unsupervised manner. It automatically learns features that primarily encode motion.

The second layer uses sparse coding to learn mid-level features in an unsupervised manner.

The feature vectors thereby obtained are pooled over time, using a max-pooling operation,

and fed to a Support Vector Machine. Excellent performance was obtained on the Hollywood-2

dataset. A similar system was built to recognize actions on the KTH dataset. It also

uses a CGRBM at the first layer, but uses a 3D (spatio-temporal) convolutional network

architecture for the following layers.

- MORE INFORMATION >>>>>.
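The temporal pooling step described above can be sketched in a few lines of NumPy. This is an illustration under assumptions, not the system's implementation: the function name `temporal_max_pool` and the toy feature values are hypothetical, and the classifier that would consume the pooled vector is omitted.

```python
import numpy as np

def temporal_max_pool(frame_features):
    """Collapse a (T, d) array of per-frame feature vectors into one d-vector
    by taking the maximum of each feature over time."""
    frame_features = np.asarray(frame_features, dtype=float)
    return frame_features.max(axis=0)

# Toy clip: 3 frames, 3 features per frame (values are made up).
clip = np.array([[0.1, 0.9, 0.0],
                 [0.7, 0.2, 0.0],
                 [0.3, 0.4, 0.5]])

pooled = temporal_max_pool(clip)   # one fixed-length descriptor per clip
```

Because the maximum is taken along the time axis, clips of different lengths yield descriptors of the same dimension, which is what a fixed-input classifier such as an SVM requires.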

| Embedded Hardware for Real-Time Vision |

- Time Period: 2008 - present.

- Participants: Clement Farabet, Yann LeCun (Courant Institute/CBLL), Eugenio Culurciello (Yale EE).

- Sponsors: ONR, US Army/STTR, NYU/Poly.

- Description: Our aim is to implement convolutional networks

and other vision algorithms on small, low-power specialized hardware.

As of September 2010, we have an operational implementation of

convolutional networks running on a development board built around a

Xilinx Virtex-6 FPGA. We also have an implementation running on a

custom 8x7cm board built around a Xilinx Virtex-4 FPGA. The system

uses a new architectural concept for high-throughput processing of

streams of data, borrowing ideas from "dataflow" architecture

concepts. The design is called "NeuFlow".

- MORE INFORMATION, VIDEO >>>>>.

| Unsupervised Deep Learning |

- Time Period: September 2004 - present.

- Participants: Marc'Aurelio Ranzato, Koray Kavukcuoglu, Y-Lan Boureau, Yann LeCun (Courant Institute/CBLL).

- Sponsors: DARPA, ONR, NSF.

- Description: Animals and humans can learn to see, perceive,

act, and communicate with an efficiency that no Machine Learning

method can approach. The brains of humans and animals are "deep", in

the sense that each action is the result of a long chain of synaptic

communications (many layers of processing). We are currently

researching efficient learning algorithms for such "deep

architectures", with a present focus on unsupervised

learning algorithms that can be used to produce deep hierarchies of

features for visual recognition. We surmise that understanding deep

learning will not only enable us to build more intelligent machines,

but will also help us understand human intelligence and the mechanisms

of human learning.

- MORE INFORMATION >>>>>.

| EBLearn: A C++ library for deep/energy-based learning and convolutional nets |

- Time Period: 2008 - present.

- Participants: Pierre Sermanet, Koray Kavukcuoglu, Yann LeCun (Courant Institute/CBLL).

- Sponsors: Willow Garage, ONR.

- Description: EBLearn is a C++ library that implements a

number of classes and functions for machine learning and computer

vision. EBLearn makes it easy to construct complex learning machines

by assembling functional blocks. A semi-automatic differentiation

mechanism enables easy gradient-based learning. The library

implements such models as convolutional networks, and makes it

possible to easily train systems that can detect objects in images

(e.g. faces, pedestrians). The library is platform independent, and

can be compiled on Linux, Windows, Android, and other platforms. It

contains a class hierarchy and several functions for manipulating

multi-dimensional tensors. The API is inspired by the machine

learning library in the Lush language.

- MORE INFORMATION >>>>>.

| Dynamic Factor Graphs for Sequential Data Analysis and Prediction |

- Time Period: September 2006 - December 2010.

- Participants: Piotr Mirowski, Yann LeCun (Courant Institute/CBLL).

- Sponsors: ONR.

- Description: A Dynamic Factor Graph models sequential data

such as financial time-series, biological (DNA/protein) sequences,

text, and other modalities. A DFG includes factors modeling joint

probabilities between hidden and observed variables, and factors

modeling dynamical constraints on hidden variables. The DFG assigns a

scalar energy to each configuration of hidden and observed

variables. A gradient-based inference procedure finds the

minimum-energy state sequence for a given observation sequence. Using

smoothing regularizers, DFGs have been shown to reconstruct chaotic

attractors and to separate a mixture of independent oscillatory

sources perfectly. Our DFGs outperformed the best known algorithm

on the CATS competition benchmark for time series prediction. DFGs

also successfully reconstruct missing motion capture data.

- MORE INFORMATION >>>>>.
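The gradient-based inference described above can be illustrated with a small NumPy sketch. It rests on strong simplifying assumptions that are not taken from the project: both factors are quadratic with linear maps, the dynamics matrix `A` and observation matrix `C` are fixed rather than learned, and inference is plain fixed-step gradient descent. All function and variable names are hypothetical.

```python
import numpy as np

def energy(Z, Y, A, C):
    """Scalar energy of a hidden sequence Z (T, n) given observations Y (T, m)."""
    obs = Y - Z @ C.T              # observation-factor residuals
    dyn = Z[1:] - Z[:-1] @ A.T     # dynamics-factor residuals
    return 0.5 * np.sum(obs ** 2) + 0.5 * np.sum(dyn ** 2)

def energy_grad(Z, Y, A, C):
    """Gradient of the energy with respect to every hidden state."""
    obs = Y - Z @ C.T
    dyn = Z[1:] - Z[:-1] @ A.T
    g = -obs @ C                   # contribution of the observation factors
    g[1:] += dyn                   # each z_{t+1} enters its dynamics factor directly
    g[:-1] -= dyn @ A              # each z_t enters through -A z_t
    return g

def infer_states(Y, A, C, lr=0.1, n_steps=500):
    """Search for a low-energy hidden sequence by gradient descent from zero."""
    Z = np.zeros((Y.shape[0], A.shape[0]))
    for _ in range(n_steps):
        Z -= lr * energy_grad(Z, Y, A, C)
    return Z

# Toy problem: a 2-D rotating hidden state observed through its first coordinate.
theta = 0.3
A = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
C = np.array([[1.0, 0.0]])
Y = np.cos(theta * np.arange(50))[:, None]

Z_hat = infer_states(Y, A, C)
```

In this toy setting the unobserved second coordinate is constrained only through the dynamics factors, which is the role the description assigns to the dynamical constraints on hidden variables.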

- Time Period: September 2006 - present.

- Participants: Sumit Chopra, Yann LeCun (Courant Institute/CBLL),

Trivikraman Thampy, John Leahy, Andrew Caplin (Economics Dept, NYU).

- Sponsors: NYU

- Description: We are developing a new type of relational

graphical model that can be applied to "structured regression

problems". A prime example of a structured regression problem is the

prediction of house prices. The price of a house depends not only on

the characteristics of the house, but also on the prices of similar

houses in the neighborhood, or perhaps on hidden features of the

neighborhood that influence them. Our relational regression model

infers a hidden "desirability surface" from which house prices

are predicted.

- MORE INFORMATION >>>>>.

- Time Period: September 2003 - present.

- Participants: Yann LeCun, Sumit Chopra, Marc'Aurelio Ranzato, Y-Lan Boureau, Fu-Jie Huang (Courant Institute/CBLL)

- Sponsors: NSF.

- Description:

Probabilistic graphical models associate a probability to each

configuration of the relevant variables. Energy-based

models (EBM) associate an energy to those configurations,

eliminating the need for proper normalization of probability

distributions. Making a decision (an inference) with an EBM consists

in comparing the energies associated with various configurations of

the variable to be predicted, and choosing the one with the smallest

energy. Such systems must be trained discriminatively to associate

low energies to the desired configurations and higher energies to

undesired configurations. A wide variety of loss functions can be used

for this purpose. We give sufficient conditions that a loss function

should satisfy so that its minimization will cause the system to

approach the desired behavior.

- Latest Publication:

- [LeCun et al. 2006]. A Tutorial on Energy-Based Learning, in Bakir et al. (eds), "Predicting Structured Outputs", MIT Press, 2006.

- MORE INFORMATION >>>>>.
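As a small illustration of the inference rule and of one possible discriminative loss, consider the NumPy sketch below. It assumes a discrete variable to be predicted, with one energy per candidate value, and uses a margin (hinge) loss as one familiar choice among the many loss functions mentioned above. The function names and the energy values are hypothetical.

```python
import numpy as np

def infer(energies):
    """Inference: choose the configuration (here a class index) with the lowest energy."""
    return int(np.argmin(energies))

def hinge_loss(energies, y, margin=1.0):
    """Zero when the desired answer y has an energy at least `margin` below the
    lowest-energy incorrect answer, positive otherwise."""
    energies = np.asarray(energies, dtype=float)
    wrong = np.delete(energies, y)       # energies of all undesired answers
    return max(0.0, margin + energies[y] - wrong.min())

energies = [2.0, 0.5, 3.0]               # made-up energies for three candidate answers
prediction = infer(energies)
loss_if_1_is_desired = hinge_loss(energies, 1)
loss_if_0_is_desired = hinge_loss(energies, 0)
```

No normalization over the candidates is computed at any point. Only energy differences matter, both for the decision and for the loss.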

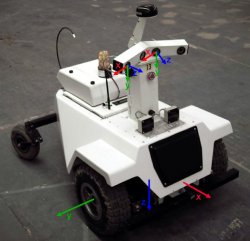

| LAGR: Learning Applied to Ground Robots |

|

Team Leaders:

Yann LeCun (Courant Institute/CBLL),

Urs Muller (Net-Scale Technologies).

Team Members:

Former Team Members:

Sponsor: DARPA

Description:

Our team is one of 8 participants in the LAGR project

funded by the US Government. Each LAGR team receives an identical

copy of the LAGR robot, built by the National Robotics Engineering

Consortium at Carnegie-Mellon University.

The purpose of the project is to design vision and learning algorithms

to allow the robot to navigate in complex outdoor environments.

MORE INFORMATION >>>>>.

|

|

| Epilepsy Seizure Prediction with Convolutional Networks |

- Time Period: 2006 - 2009.

- Participants: Piotr Mirowski, Yann LeCun (Courant Institute/CBLL).

- Sponsors: NYU Medical Center

- Description: A collaboration between CBLL, Dr. Deepak

Madhavan (University of Nebraska Medical Center), and Dr. Ruben (NYU

Comprehensive Epilepsy Center). In 2009, our methodology achieved the

best known result on the 21-patient EEG public dataset from the

University of Freiburg. Our best algorithm succeeded in predicting all

test seizures with no false positives on 15 patients out of 21. The

method is built around a temporal convolutional network applied to

wavelet-based phase-locking synchrony features.

- MORE INFORMATION >>>>>.

| DAVE: Learning Vision-Based Obstacle Avoidance for Mobile Robots |

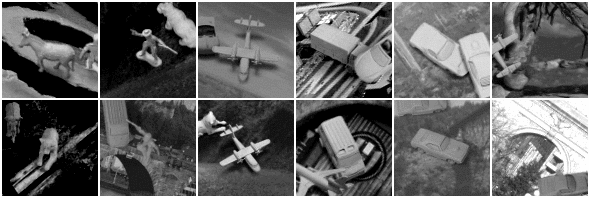

| NORB: Generic Object Recognition in Images |

- Time Period: September 2003 - 2009.

- Participants: Fu Jie Huang, Yann LeCun (Courant Institute/CBLL), Leon Bottou (NEC Labs).

- Sponsors: NSF.

- Description: The recognition of generic object categories with invariance to pose,

lighting, diverse backgrounds, and the presence of clutter is one of

the major challenges of Computer Vision.

We are developing learning systems that can recognize generic objects

purely from their shape, independently of pose, illumination, and

surrounding clutter.

- Publications:

- [LeCun, Huang, Bottou, 2004].

Learning Methods for Generic Object Recognition with Invariance to Pose and Lighting

Proceedings of CVPR 2004.

- Dataset: Download the

NORB dataset.

- MORE INFORMATION, VIDEOS, PAPERS >>>>>.

|

|

- Time Period: January 2004 - present.

- Participants: Feng Ning, Yann LeCun (Courant Institute/CBLL),

Leon Bottou (NEC Labs), Fabio Piano (NYU, Biology Dept), Paolo Barbano

(Yale University).

We are using convolutional networks and conditional random fields to

automatically segment movies of developing C. elegans into regions:

cell nucleus, nucleus membrane, cytoplasm, cell membrane.

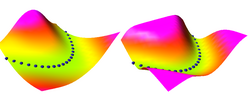

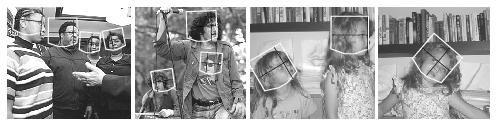

| Simultaneous Face Detection and Pose Estimation |

- Time Period: September 2003 - June 2004.

- Participants: Margarita Osadchy (NEC Labs, Technion), Matt Miller (NEC

Labs), Yann LeCun (Courant Institute/CBLL).

- Description:

We developed a novel method for real-time, simultaneous multi-view

face detection and facial pose estimation. The method employs a

convolutional network that maps face images to points on a manifold,

parameterized by pose, and non-face images to points far from that

manifold. This system is trained as an "Energy-Based Model"

with a discriminative loss function.

- Publications:

- Video: watch a video of the system in action:

[AVI, 4.9MB].

- MORE INFORMATION, VIDEOS, PAPERS >>>>>.
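The manifold idea in the description above can be illustrated with a toy sketch. Everything specific here is an assumption made for illustration: the manifold is taken to be a half circle in a 2-D output space parameterized by a single yaw angle, the energy is the Euclidean distance from the network output to the nearest manifold point, the search is a simple grid, and the threshold value is arbitrary. The convolutional network that would produce the output `g` is not shown.

```python
import math

def pose_manifold(yaw):
    """Hypothetical 1-D pose manifold embedded in a 2-D output space."""
    return (math.cos(yaw), math.sin(yaw))

def energy_and_pose(g, n_grid=360):
    """Return (distance from g to the manifold, yaw of the closest manifold point),
    searching yaw over a grid on [-pi/2, pi/2]."""
    best_energy, best_yaw = float("inf"), 0.0
    for k in range(n_grid):
        yaw = -math.pi / 2 + math.pi * k / (n_grid - 1)
        fx, fy = pose_manifold(yaw)
        d = math.hypot(g[0] - fx, g[1] - fy)
        if d < best_energy:
            best_energy, best_yaw = d, yaw
    return best_energy, best_yaw

def detect(g, threshold=0.5):
    """Declare a face when the output lies close to the manifold; the pose
    estimate comes from the same minimization."""
    e, yaw = energy_and_pose(g)
    return e < threshold, yaw

# An output lying on the manifold (face at yaw 0.3 rad) and one far from it (non-face).
is_face, yaw_est = detect((math.cos(0.3), math.sin(0.3)))
is_face_far, _ = detect((3.0, 3.0))
```

A single minimization yields both answers: the minimum value decides face versus non-face, and the minimizing angle is the pose estimate.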

|

|